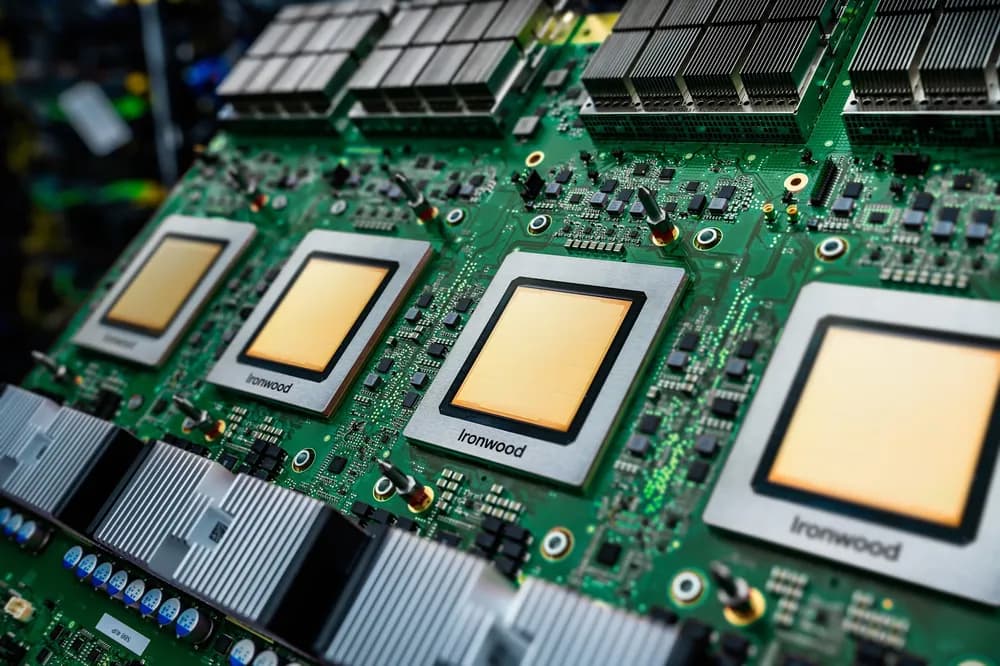

Ironwood TPU: Google’s Next-Gen AI Accelerator

A deep dive into Google Cloud’s seventh‑generation TPU, Ironwood — designed for large‑scale, low‑latency AI inference and model serving.

Overview

Ironwood is the seventh-generation Tensor Processing Unit (TPU) from Google Cloud, designed to accelerate inference and model serving for AI workloads at very large scale and with high efficiency.

Why Ironwood Matters

- Built for inference at scale: Optimized for high-throughput, low-latency inference for real-time AI applications and generative AI models.

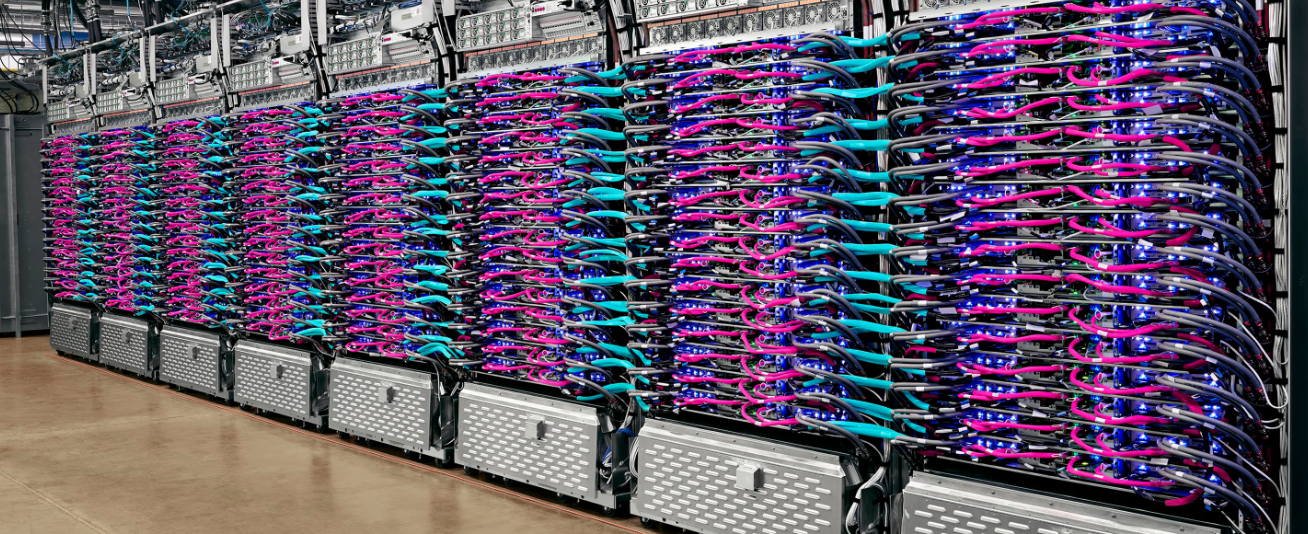

- Massive scale and performance: An Ironwood superpod can connect up to 9,216 chips, delivering up to 42.5 exaFLOPS of peak compute.

- Per-chip performance gains: Delivers more than 4× the performance per chip of the previous generation.
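To put the superpod figure in per-chip terms, this short Python sketch divides the stated 42.5 exaFLOPS evenly across 9,216 chips. It assumes the number is an aggregate peak that splits linearly across chips, which real workloads will not sustain; the article also does not state the numeric precision behind the figure, so the result inherits that caveat. The constant and function names are illustrative only.

```python
"""Back-of-the-envelope split of Ironwood superpod peak compute per chip.

Assumption: the 42.5 exaFLOPS figure is an aggregate peak spread evenly
across all chips; sustained throughput on real models will be lower.
"""

SUPERPOD_CHIPS = 9_216      # chips per superpod, as stated in the article
SUPERPOD_EXAFLOPS = 42.5    # aggregate peak compute, as stated in the article

PETAFLOPS_PER_EXAFLOPS = 1_000


def per_chip_petaflops(total_exaflops: float, chips: int) -> float:
    """Return one chip's even share of the peak compute, in petaFLOPS."""
    if chips <= 0:
        raise ValueError("chips must be positive")
    return total_exaflops * PETAFLOPS_PER_EXAFLOPS / chips


if __name__ == "__main__":
    share = per_chip_petaflops(SUPERPOD_EXAFLOPS, SUPERPOD_CHIPS)
    print(f"~{share:.2f} petaFLOPS peak per chip")  # prints "~4.61 petaFLOPS peak per chip"
```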

Key Features & Architecture

1. Purpose‑Built for Inference

Ironwood prioritizes efficient, scalable inference over raw training performance, supporting real-time responses for AI applications.

2. Massive Parallelism

A single Ironwood superpod integrates up to 9,216 TPU chips connected through a high-bandwidth interconnect, providing 1.77 PB of shared high-bandwidth memory (HBM). This creates a cohesive “AI hypercomputer” for large-scale workloads.
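The 1.77 PB figure is easier to reason about per chip. This sketch divides it evenly across 9,216 chips (about 192 GB each) and estimates how many chips a model's raw weights would occupy. It assumes decimal units and counts weights only, ignoring KV cache, activations, and any replication a real serving setup would add; the function names and the 1-trillion-parameter example are hypothetical.

```python
"""Rough per-chip share of Ironwood superpod shared memory, plus a weights-only fit check.

Assumptions: decimal units (1 PB = 10**15 bytes), memory split evenly across
chips, and only model weights counted (no KV cache, activations, or replicas).
"""
import math

SUPERPOD_CHIPS = 9_216               # as stated in the article
SUPERPOD_MEMORY_BYTES = 1.77e15      # 1.77 PB, as stated in the article
PER_CHIP_BYTES = SUPERPOD_MEMORY_BYTES / SUPERPOD_CHIPS


def per_chip_memory_gb() -> float:
    """Even share of superpod memory per chip, in decimal gigabytes."""
    return PER_CHIP_BYTES / 1e9


def min_chips_for_weights(num_params: float, bytes_per_param: float) -> int:
    """Smallest chip count whose combined memory holds the raw weights."""
    weight_bytes = num_params * bytes_per_param
    chips = math.ceil(weight_bytes / PER_CHIP_BYTES)
    if chips > SUPERPOD_CHIPS:
        raise ValueError("weights alone exceed one superpod's memory")
    return chips


if __name__ == "__main__":
    print(f"~{per_chip_memory_gb():.0f} GB per chip")  # prints "~192 GB per chip"
    # Hypothetical model: 1 trillion parameters stored at 2 bytes each (e.g. bfloat16).
    print(min_chips_for_weights(1e12, 2), "chips for weights alone")  # prints "11 chips for weights alone"
```

Real deployments shard weights for bandwidth and latency as much as for capacity, so the minimum chip count is a lower bound, not a sizing recommendation.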

3. Co‑Designed for AI Workflows

Ironwood’s hardware and software stack was co-designed with AI researchers to optimize performance, efficiency, and memory access for large models.

Implications for Developers and AI Researchers

- Faster, cheaper inference at scale: Supports millions of requests with low latency and high efficiency.

- Cloud-based supercomputing: Simplifies deploying AI models, with no on-premises hardware clusters to manage.

- Better performance for large models: High-bandwidth memory and a high-bandwidth interconnect reduce memory and communication bottlenecks.

- Optimized integration with ML frameworks: Supports frameworks like JAX and PyTorch for seamless deployment.
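To illustrate the framework integration, here is a minimal JAX sketch, assuming a working JAX installation. On a Cloud TPU VM `jax.devices()` lists TPU devices; on a machine without an accelerator the same code runs on CPU. It compiles one dense layer with `jax.jit` and shards the input batch across every visible device. The layer sizes, the `"data"` mesh axis name, and the helper names (`make_data_mesh`, `dense_relu`, `sharded_inference`) are hypothetical choices for illustration, not an Ironwood-specific API.

```python
"""Minimal JAX sketch: run one jit-compiled dense layer with the batch sharded
across every device JAX can see (TPU devices on a Cloud TPU VM, else CPU)."""
import numpy as np
import jax
import jax.numpy as jnp
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P


def make_data_mesh() -> Mesh:
    """1-D device mesh; "data" is an arbitrary axis name chosen for this sketch."""
    return Mesh(np.array(jax.devices()), axis_names=("data",))


@jax.jit
def dense_relu(w, b, x):
    """One dense layer plus ReLU, compiled by XLA for the target devices."""
    return jax.nn.relu(x @ w + b)


def sharded_inference(per_device_batch=4, d_in=128, d_out=64, seed=0):
    mesh = make_data_mesh()
    n_devices = mesh.devices.size
    k_w, k_x = jax.random.split(jax.random.PRNGKey(seed))
    w = jax.random.normal(k_w, (d_in, d_out)) * 0.02
    b = jnp.zeros((d_out,))
    # Batch size is a multiple of the device count so it splits evenly.
    x = jax.random.normal(k_x, (per_device_batch * n_devices, d_in))

    # Split the batch axis across devices; replicate weights and bias on each one.
    x = jax.device_put(x, NamedSharding(mesh, P("data", None)))
    replicated = NamedSharding(mesh, P())
    w = jax.device_put(w, replicated)
    b = jax.device_put(b, replicated)
    return dense_relu(w, b, x)


if __name__ == "__main__":
    devices = jax.devices()
    print(f"JAX sees {len(devices)} {devices[0].platform} device(s)")
    y = sharded_inference()
    print("output shape:", y.shape)
```

Data parallelism is shown only because it is the simplest sharding to demonstrate; large models are usually also sharded along model dimensions, which this sketch does not attempt. PyTorch runs on TPUs through PyTorch/XLA, which is not shown here.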

What We Know and What’s Still TBD

| Known | TBD |
|---|---|
| Up to 9,216-chip superpod delivering 42.5 exaFLOPS | Pricing and cost per inference for various workloads |
| More than 4× per-chip performance vs. the prior generation | Real-world performance across diverse AI models |
| 1.77 PB of shared memory with a high-bandwidth interconnect | Availability across regions and cloud tiers |
| Integration with major ML frameworks | Long-term roadmap and specialized workload support |

Conclusion

Ironwood represents a major advancement in AI infrastructure, focusing on scalable, efficient inference rather than just training. It provides massive compute, high memory bandwidth, and streamlined integration with AI frameworks, making it a foundational platform for next-generation AI applications.